What comes after the chatbot?

Notes and image credits for my talk at the AAC&U AI Institute

This essay is based on a talk titled “On Beyond Chatbots: Purpose-built AI Tools for Solving Academic Problems” that I gave as part of the kick-off for the AAC&U’s Institute on AI, Pedagogy, and the Curriculum, held September 11-12, 2025. One goal for the Institute participants this year is learning about the practical implementation of AI technologies, so the presentation focused on using specific AI tools that go beyond the chatbot.

My work as a consultant brings me into contact with a lot of technology companies, and sometimes they give me access to their products at no cost, and occasionally they pay me to talk with them or give a keynote at a conference. That raises questions about influence and influencing, and so please indulge this effort at transparency.

The opinions I share here on AI Log and in talks like this one are my own. I do not accept payment to mention a product or company or to say anything specific about the products or the companies I discuss. I have a disclosures page on my website so that my readers can determine for themselves to what extent I am “a shill for AI,” an accusation once leveled at my colleague, Lance Eaton.

Chatbots are an old technology

Three years ago, if you asked someone at OpenAI about putting a chat interface on their latest generative pre-trained transformer, they would have shrugged. At the time, OpenAI was super excited about their image generator, DALL-E 2, and the big AI story of the summer was about Google firing an engineer who believed the language model he worked on had become sentient.

There was no reason to believe chatbots were anything more than a novelty or an annoyance. After all, AI chatbots are more than fifty years old. Remember Clippy? Remember the early versions of Siri and Alexa? Heck, remember the last time you used Siri or Alexa!

Most AI researchers and product developers in 2022 believed that the chatbot was AI's past. AI's future was going to be something... futuristic.

The product developers who released ChatGPT in late 2022 did so as an experiment, a way to gather data. They were as surprised as everyone else at the result: the creation of what is often described as the fastest-growing computer application in history.

We don’t have time to go deep into why ChatGPT took off the way it did, but one big reason had to do with the collective sense that it was Shel Silverstein’s Homework Machine made real. The resulting homework apocalypse panicked educators and inspired pundits, resulting in a constant stream of over-excited speculation about what AI means for education.

You don’t learn much during a moral panic, but I believe that ChatGPT’s value, so far anyway, is in how it forces us to face important educational problems that long predate generative AI. Schooling has increasingly become a matter of measuring meaningless outcomes and not fostering student learning. As John Warner put it a few weeks after the release, ChatGPT can’t kill anything worth preserving.

Chatbots are yet another screen-based, attention-grabbing social media. Talking to “Chat” about your problems is just one more thing students do that isn’t spending time with others. The Eliza Effect, the tendency to treat chatbots like they are people, is now a mass phenomenon. Again, ChatGPT did not cause these social problems, and neither is it the answer.

Nearly three years into the GPT revolution, I think that the AI chatbot phase of this new technology is winding down. Today, we will look at a handful of tools that illustrate what I mean, but first let me offer a frame that avoids some of the distracting hype and speculation about AI.

Generative AI is normal technology

AI Snake Oil is my favorite book on AI from 2024. The authors, Arvind Narayanan and Sayash Kapoor, write one of the best AI newsletters. Their new book project, AI as normal technology, and their relaunched newsletter under the same name, offer an alternative to the hype about imminent superintelligence and predictions of an immediate transformation of work and education. Treating generative AI as normal technology focuses our attention on the social processes of adopting this new social technology rather than speculation about the unknowable future it may bring.

Excitement about how many people have AI companions or used ChatGPT to do homework obscures the wider potential impacts of the Generative Pre-trained Transformer. The hype around this invention is normal, even though the scale of capital being deployed to develop and promote generative AI is unprecedented. But all that money and hype won’t do much to speed up the pace of organizational change. This frustrates the big AI companies and their investors, as well as enthusiastic early adopters looking for evidence to back up their predictions.

The speed of progress happens at the speed of humans and institutions. Electricity, the internal combustion engine, and the electronic computer all took decades to understand, to innovate, and to commercialize. Those technologies didn’t feel normal when they were invented; they became normal through social processes of adaptation and adoption. Generative AI will be no different. This leads me to my favorite “law,” which is about AI but applies to so much more.

Let me emphasize a point Narayanan and Kapoor make: calling generative AI normal technology is not to discount its impact. These tools are powerful, and their capabilities have improved rapidly over the past four years. Transformer-based AI models are changing how we understand culture, education, and the relationship between language and mathematics.

Alison Gopnik and her colleagues help make sense of that change when they argue that transformer-based AI models are cultural technology, analogous to the printing press, the open-stack library, or the graphite pencil, each one normal in the sense that it took decades, and even centuries, for humans to understand and make use of them.

What do large AI models actually do?

What do large AI models like Claude and GPT do besides simulate a conversation and do homework? The range of answers is vast.

Here are three things that AI models do that I will show you today:

They provide natural language interfaces to unstructured and semi-structured data.

They are used to develop purpose-built tools that solve specific problems, including academic problems.

They can be added to academic and business processes, making them more complex, and therefore, more powerful and adaptable. This is often called orchestration.

The best way to think of how large AI models provide interfaces to data is to briefly review the history of information storage and retrieval systems. When I started my career, we used this technology, developed at the end of the nineteenth century.

Slowly, over the past thirty years, we adopted this technology.

Today, thanks to powerful large language models, we can now talk with our data by typing into a box or speaking into a microphone.

Chat interfaces are but one example of how AI models can help understand data. Let’s talk about some others.

Natural language search

My favorite example of natural language interface is this story from The Markup, from back in April. The headline is scary, right? AI managing a nuclear power plant sounds like the plot of a B-movie.

But as the article explains, AI is not managing anything. The product is a natural language search engine that uses “open-source sentence embeddings” to search through nuclear data to help humans find what they need—very useful if you work in a nuclear power plant, or just about any other enterprise that seeks to manage information and knowledge.

Here is how the article describes what’s going on at Diablo Canyon.

The artificial intelligence tool named Neutron Enterprise is just meant to help workers at the plant navigate extensive technical reports and regulations — millions of pages of intricate documents from the Nuclear Regulatory Commission that go back decades — while they operate and maintain the facility.

The state of California recently extended the plant’s approval to operate through 2029. That means workers there are facing hundreds of decisions to replace or extend their safety equipment, looking at depreciation schedules, and updating procedure manuals.

Millions of pages of documents going back decades!

To be clear, the algorithm decides nothing. All the AI model does is find the relevant regulations and guidance for each decision the workers have to make, so the workers themselves can interpret the rules and decide what to do.

Given the overwhelming amount of digital information we contend with, having a tool that helps sort through unstructured data to help find what’s relevant is useful. There is nothing that requires jumping to have it automate decisions.

Natural language search of academic data

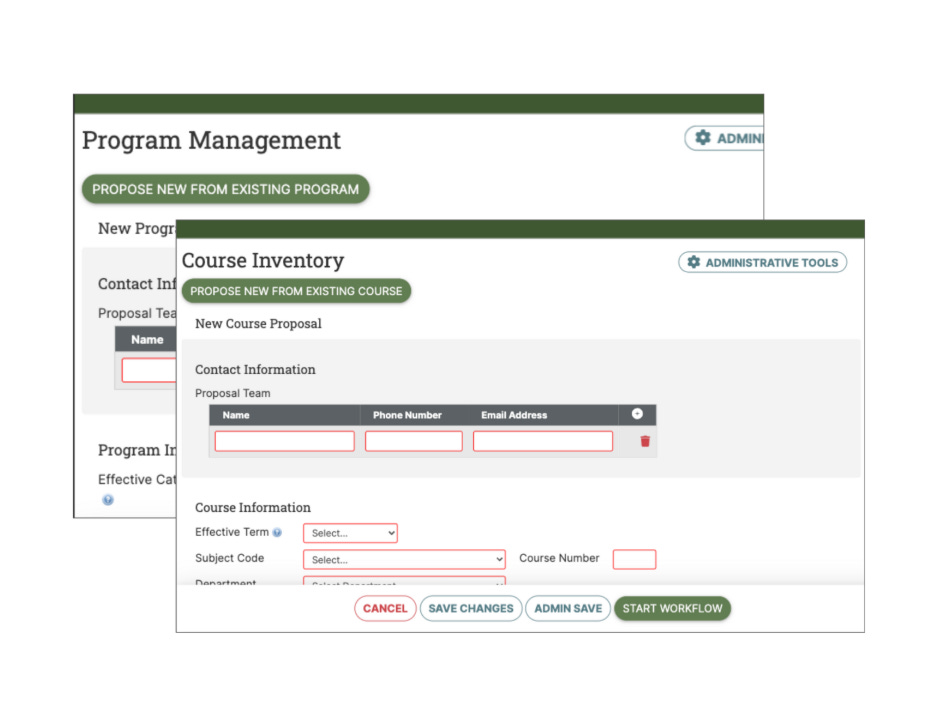

Some of you are on campuses that use Courseleaf, a platform that manages academic processes like course rostering, registration, and academic planning. One of their modules is Curriculum Manager. This is a system faculty members use to propose new courses, and administrators use to manage the curriculum review and approval process.

As anyone who has proposed a new course or is involved in reviewing curriculum proposals knows, one of the big questions in curriculum development is what else is out there (either already existing courses or those being proposed) that is similar to what you are proposing? You don’t want to poach students from another program with your new course…or, maybe you do, but you want to know that before it goes to the dean for review. A keyword search might get you a list, but we all know that jargon and specialized language in each academic discipline make identifying subject matter overlap difficult.

Courseleaf is developing a feature that, just like Neutron Enterprise, uses natural language search to find similar courses at your institution, both existing courses and those that are currently under review.
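To make the idea concrete, here is a minimal sketch of how a "similar courses" search can be structured: turn each description into a vector, then rank by cosine similarity. Nothing here comes from Courseleaf or Neutron Enterprise. The catalog, the course codes, and the function names are invented for illustration, and `embed` is a plain word-count stand-in. A real system would swap in a learned sentence-embedding model at that step, which is what lets it match descriptions that share meaning but not vocabulary.

```python
import math
import re
from collections import Counter

# Hypothetical catalog: course codes and descriptions are invented for illustration.
COURSES = {
    "HIST 210": "The history of computing machines and information technology in society",
    "CIS 105": "Introduction to programming and computational problem solving",
    "SOC 330": "Technology, society, and the social history of information",
    "BIOL 101": "Cells, genetics, and evolution for first-year students",
}

def embed(text):
    """Stand-in for a sentence-embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def similar_courses(proposal, catalog, top_k=2):
    """Rank catalog entries by similarity to a proposed course description."""
    query = embed(proposal)
    scored = [(cosine(query, embed(desc)), code) for code, desc in catalog.items()]
    scored.sort(reverse=True)
    return [(code, round(score, 2)) for score, code in scored[:top_k]]

results = similar_courses(
    "A seminar on the social history of information technology", COURSES
)
print(results)
```

The output is just a ranked list of course codes with scores, which a person then reads and judges. The tool retrieves; it does not decide.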

Of course, you could ask ChatGPT or Copilot to do that sort of task, but think about the points of failure with a chatbot. You would have to get it to ingest a huge amount of institutional data. It might confabulate or hallucinate some or all of its answers. Talking with it might be fun, but really, you just want a list of courses, not a little buddy telling you how insightful your questions are.

Just like AI note-takers and customer service bots have been appearing everywhere, this kind of advanced natural language search is coming to platforms and apps. Instead of Clippy-like companions and co-pilots, we will see more natural language functions built into existing products that retrieve the information you want and help you take action on it.

A chat+ interface to the Web

Adding natural language functions to your internet browser reimagines a tool we take for granted. Instead of a Google search box or a chatbot, you may soon find yourself using natural language functions that add layers to chat so that you can interact with the World Wide Web.

All the big AI companies are working on some version of this, but The Browser Company’s Dia seems to be a little ahead of the curve. This concept is a little hard to explain, so here is a video featuring students explaining how they use Dia, and here is blogger and professor of economics, Brad DeLong, describing his experience using it, and designer Jurgen Gravestein writing about the trend of AI powered browsers.

While there are reasons to be skeptical about English becoming the hottest new programming language, it does seem clear that using natural language to understand the data in your browser tabs is useful. Features like Courseleaf’s “similar courses” and redesigned browsers like Dia point toward a future where natural language functions within existing technology, rather than as an external co-intelligence you talk to.

Purpose-built to solve academic problems

This next example flips from data interface to data analysis tool. As I said earlier, AI is changing how we understand the relationship between language and mathematics. I’d love to talk about how the digital humanities is rising to these questions, but I promised to stay focused on the practical tools. So, let’s talk about a tool that harnesses a large language model, which, after all, is the application of mathematics to language, to understand and solve bureaucratic problems.

I managed Penn’s course evaluation system for many years. It always made me sad to think about all the information we gathered from student comments that never went beyond the instructor. Some department chairs might read through them, or maybe cherry-pick a few good comments for a promotion packet. But mostly all that information just sits there in a database or warehouse.

Think back to my example of a purpose-built language model searching through nuclear-related data. Explorance MLY is an AI model built for analyzing text-based information, a cousin to Neutron Enterprise, that can function in a similar way for course evaluations or any other database of natural language feedback. It includes a classification mechanism that provides sentiment analysis and sorts comments into categories.

Here is Explorance’s CEO, Samer Saab, talking about MLY in the context of responsibility:

MLY offers practical value in its ability to identify information in the unstructured course evaluation data. Say you want to know what students had to say about a specific classroom or compare how students describe small seminars versus large lecture courses. Or, to bring up a pet project of mine, let’s say you wanted to read all comments specifically about active teaching methods, even those for courses that do not use such methods. To get even more practical, let’s say you wanted to screen potentially biased or harmful student comments before they are sent to brand-new teachers. MLY helps you do that.
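For readers who want to picture what sorting and screening comments looks like as a process, here is a toy sketch. It is not how Explorance's product works: a trained language model does the classifying there, while this version uses hand-written keyword lists. The categories, trigger words, screening list, and sample comments are all invented for illustration. The shape of the output is the point: each comment gets topic tags and a flag that sends it to a human for review.

```python
import re

# Hypothetical categories and trigger words, invented for illustration.
CATEGORIES = {
    "classroom": {"room", "classroom", "seats", "projector"},
    "active_learning": {"discussion", "group", "groups", "activity", "activities"},
    "workload": {"workload", "homework", "readings", "deadlines"},
}

# Hypothetical screening list; a real tool would use a trained classifier.
FLAG_WORDS = {"stupid", "ugly", "accent"}

def tag_comment(comment):
    """Attach topic tags and a needs-review flag to one student comment."""
    words = set(re.findall(r"[a-z]+", comment.lower()))
    topics = sorted(name for name, triggers in CATEGORIES.items() if words & triggers)
    return {"topics": topics, "needs_review": bool(words & FLAG_WORDS)}

# Invented sample comments.
comments = [
    "The group activities helped, but the room was too small.",
    "Too many readings and the instructor's accent was annoying.",
]
tagged = [tag_comment(c) for c in comments]
for comment, tags in zip(comments, tagged):
    print(tags, "|", comment)
```

A flagged comment is held for a person to read before it reaches the instructor; nothing is deleted or decided automatically.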

Teaching as orchestration

We covered natural language beyond chat and purpose-built models. Now let’s talk about the last, and in many ways, the trickiest of the ways I see us moving beyond chatbots. What happens when we add one or more generative AI tools to academic or business processes, making those processes more complex? This involves orchestrating the work of the models themselves along with the work of the humans involved. Here is a simple example drawn from my teaching.

Last fall, I worked with the folks at the Penn Center for Learning Analytics to use a large language model tool called JeepyTA in my history class. I wrote about the experience here.

Given the layoffs and hiring freezes, along with the fundamental inability of large language models to replace human teachers, I am always careful to describe JeepyTA as an instructional tool, not a teaching assistant. The frame of AI-powered digital twins or All Day TAs cedes too much to the tech oligarchs and cost-cutting bureaucrats who dream of replacing teachers with chatbots.

I use JeepyTA narrowly, to add a step to the process of peer review. Here is how I described it in a previous essay:

Rather than have JeepyTA emulate how I give writing feedback in order to substitute for feedback I would give, I conceived its role as providing an additional layer of feedback, a preliminary step each student takes before showing a draft to a peer or a member of the teaching team. We configured JeepyTA to give this feedback by post-training it on the assignment and examples of previous first-draft feedback.

At our first peer-review workshop, each student had a peer essay + JeepyTA’s feedback to review. This had the effect of helping students get past their hesitancy to criticize a classmate’s writing because they started with machine-generated feedback. My students and I believe the simulated feedback helped jump-start peer review. I will use the same technique for the course I am teaching this semester.

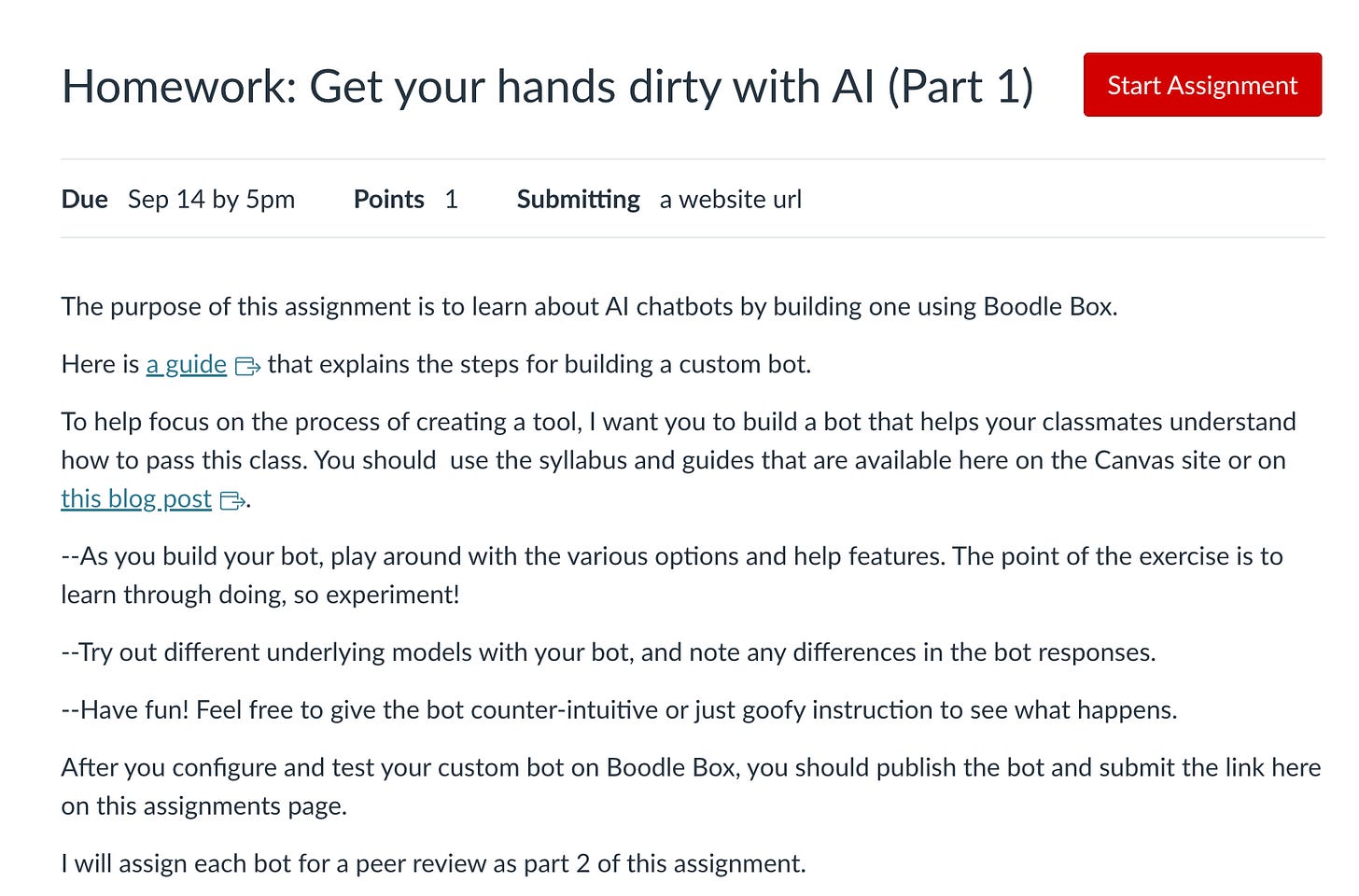

That course is called How AI is Changing Higher Education (and what we should do about it), and, given the subject, I wanted to give students some practical experience building AI tools. For that, they are using BoodleBox.

Orchestrating class assignments

The assignment involves what I call getting our hands dirty by working with AI. We are all familiar with the dilemma of spending hours crafting a syllabus and assignment guides only to have students not read them. This week, I asked my students to create what Henry Farrell calls a course syllabus that speaks as a way to have students engage with my course materials and experiment with generative AI tools.

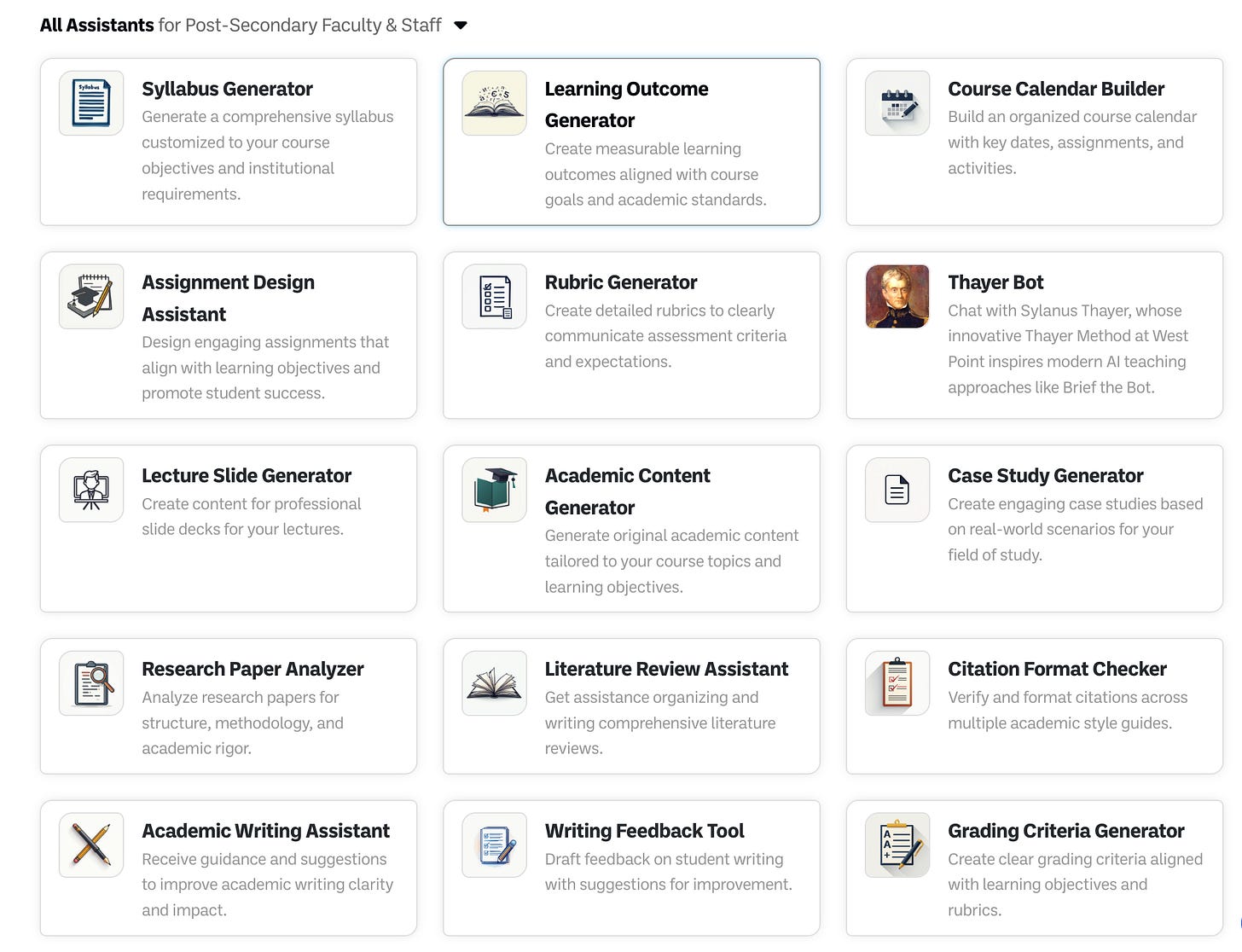

Using BoodleBox as an AI toolkit, each student will develop a custom bot designed to answer questions about how to succeed in the seminar. They will experiment with using different AI models (the ability to switch out models is a feature of BoodleBox). Then, we'll conduct peer review and testing of the bots and compare the experience of talking to an AI bot with the old-fashioned method of reading the syllabus and course guides.

Note what I am doing here with JeepyTA and BoodleBox. Instead of an AI chatbot helping me design lesson plans or answer questions when I’m unavailable, I am managing various AI tools, orchestrating their use for specific purposes, while also directing the activities of my students. All this is to create opportunities to learn about the application of AI tools in higher education.

If you want more complex examples of orchestration in teaching, the place to go is large introductory STEM courses. As a participant in the talk pointed out, one of the best-known examples is Eric Mazur’s work developing tools like Perusall and using them for peer instruction in Physics. Increasingly, tools built from large AI models are being used for a variety of narrow instructional purposes.

I don’t have examples of complex orchestration in the administration of higher education. (If you know of any, please get in touch!) I’m grateful to the participant in my talk who asked me to explain what I mean by orchestration.

The best example I can come up with is Reuters, which has been adopting and adapting new information technologies since the telegraph. This Decoder interview with Paul Bascobert describes how the operations of a newsroom spread across the globe are being transformed by the incorporation of large language models and other types of AI. As you'll hear, this is far more than chatbots and copyediting.

If you want to get a sense of thinking about AI models working together, try out BoodleBox and explore the collaboration tools they have just added to their platform. You might do what my students are doing this week: build a custom AI tool to help accomplish a task, and then think about how to incorporate it into the processes of your course or your office.

Higher education should shape the market in AI tools

At the end of the session, one of the participants talked about the value of using smaller, open source models instead of the latest and largest models. This is a view I share. So much attention is focused on the giant technology companies and their products that alternative models or companies rarely enter the conversation on campuses.

These giant companies have made giant bets on AI chatbots, and it is not clear whether those bets will pay off. Competition is fierce, and growing user numbers is all-important. How much will people pay for a chatbot subscription? What happens when the costs of producing large AI models decrease and competition increases? These are important questions…for AI companies.

Higher education has enough problems without borrowing those of Google and OpenAI. Educators should keep this in mind as the pressure to speed up progress on AI adoption continues. There are economic and ethical reasons to use small, open source models, and to buy products from companies who act responsibly, not just talk about “responsible AI.”

Educational institutions should refuse to work with companies that “move fast and break people.” To the extent that faculty and teachers have a voice in institutional decision-making, we should call out companies that quietly erode data privacy while giving their product away for free to students, and demand procurement processes take into account ways AI companies are commercializing their products.

Given their track record of dissembling and illegal behavior, why would any college or university do business with OpenAI? We should insist on better from companies looking to be our “AI partners.” There are hundreds of AI companies competing for our business. Let’s work with those that take care not to harm our students.

AI Log, LLC. ©2025 All rights reserved.

