After the AI bubble: ChatGPT as an off-modern educational technology

We do not ride on the railroad; it rides upon us. Did you ever think what those sleepers are that underlie the railroad?

—Henry David Thoreau, Walden

For anyone vaguely aware of economic history, the question of “what’s next with AI?” must, at least occasionally, involve thinking of tulips, the South Seas, the railroad mania. And, pets.com.

Brad DeLong says, “The ghost of 2000 haunts the present moment. Are we, once again, mistaking via speculative exuberance the magnitude and immediate profitability of what really is genuine transformation?”

My students and the economics bloggers I read have convinced me that the transformer-based cultural tools we call generative AI represent a genuine transformation, one we have barely begun to figure out. The technology is built atop decades of investments of financial and cultural capital in the World Wide Web and the uses of data collected there. As Dave Karpf put it last year, the promise of generative AI is “what if Big Data, but this time it works.” It seems to be working. For some of the people. Some of the time.

How many people and how much of the time are hard to measure. We can see that it is a better Clippy, a natural language interface, a code generator, a homework machine for knowledge workers and students. And, for those who choose to use it in this way, a tool to visualize, interrogate, and better understand what Eryk Salvaggio of Cybernetic Forests calls “the gaps between datasets and the world they claim to represent.”

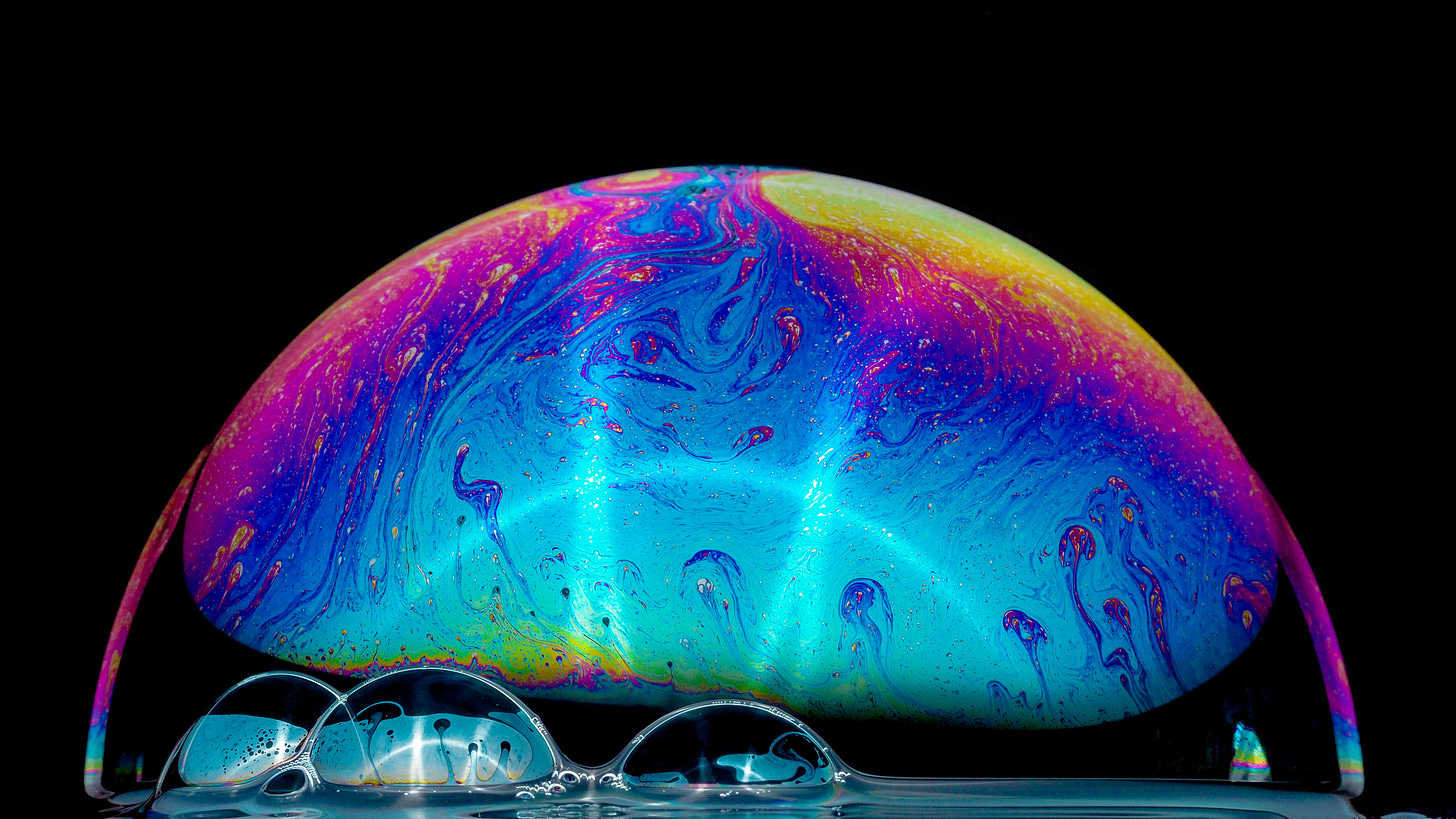

AI does not hold up a mirror to the human mind. It provides tools to manipulate and learn from giant cultural and social datasets. It teaches us about the difference between what large AI models confabulate from datasets and what humans understand about the world. The gaps are difficult to see through the soap film of a bubble. Yet, with every study showing the gaps persist, every system card reporting hallucination rates, every new paper coming out of Shaping the Future of Work, every post by Gary Marcus saying “I told you so,” every time someone tries to get an output to match the hype, the film grows thinner.

The dynamics of this bubble operate through successive demonstrations of new models and new capabilities that obscure the space between what the last demo promised and what the models delivered. To believe what Sam Altman or Dario Amodei say is happening requires a suspension of disbelief like the one we grant a science fiction film. The premise that simulating human conversation is the equivalent of simulating human thinking has been the basis for films from Metropolis to 2001: A Space Odyssey to Her. Each has an AI character who demonstrates its capacity to think by speaking, human-like, on a screen. When the movie ends, the sense of our lived experience returns and we can reflect on the fiction. Those unable to navigate that return suffer from delusions.

About those delusions

Ben Riley is concerned about those with mental health problems interacting with AI chatbots. He’s right. We should be trying to better understand the harms these tools cause actual humans. Do the natural language capabilities of this cultural technology make it more dangerous to people with mental illness than previous technologies? It seems possible. As realistic as a movie feels, it does not call you by your name. The short-term financial incentives for companies to ignore such questions are strong. Yet, like the copyright violations being adjudicated, the possibility of widespread psychological harm from using large AI models gives substance to the ghost of 2000.

There is more than one ghost from the first decade of this century haunting this latest burst of enthusiasm for AI. Take the dream that digital social media would foster democracy and human connection. That dream died as Google pivoted from avoiding evil to holding hands with it. Facebook went from a neat way to connect with friends to a business model for producing compulsive behaviors. Amazon built convenience on the ruthless extraction of value from drivers and warehouse workers and creepy surveillance of those workers and its customers.

Another dead dream from 2000 is that digital technology would reduce operating costs and headcount for organizations. Don’t blame the technology for this failure. Just as the death of the Web was laid at the feet of consumers making bad choices, the failure of the digital transformation to return on the investments was blamed on workers. Change management is expensive and, in the end, ineffective.

As Svetlana Boym puts it, “In the advanced technological lingo the space of humanity itself is relegated to the margin of error. Technology, we are told, is wholly trustworthy, were it not for the human factor.” Consumers are to blame for their bad habits with screens. Workers are to blame for their misery with the enterprise technology.1

The latter helps explain the appeal of AI chatbots as worker replacements. AI will happily produce outputs at relatively little cost compared with human labor. It’s not just salaries. Retirement and healthcare benefits are expensive. AI models do not ask annoying questions or raise objections. No hard conversations about why there won’t be raises this year or why coming into the office four days a week is “mission-critical.” No questions at town halls about social justice or health plans. AI offers a delusion for executives who just want technology to work as promised, and workers to be less of a bother.

There is a sad desperation to the dream of using AI to replace human workers. There are also billions of dollars being spent to make it a reality. Yet, reality is not cooperating. Generative AI stubbornly resists: it cannot deliver, unerringly, the results expected. At best, it can automate specific tasks, as long as someone who understands the job double-checks the outputs. Embarrassingly, the mistakes are clearly the fault of the AI models, not the humans.

Boym sees potential when technology fails: “this margin of error is our margin of freedom.” “Broken-tech,” she calls it, and says, “It cheats both on technological progress and on technological obsolescence.” By persistently offering outputs that humans can plainly see are wrong, and not just wrong, but weirdly wrong, generative AI identifies itself as broken-tech. And broken-tech has value precisely because it fails to conform to modern bureaucratic expectations.

In One Useful Thing and his book Co-Intelligence, Ethan Mollick writes about embracing generative AI’s weirdness. His answer is to anthropomorphize AI models to help manage the tendency for outputs to appear strangely human-like and wrong. As he puts it, AI is not good software. It is pretty good people. He is only half right, but understanding that generative AI is “not good software” is as important as recognizing that it is not good people.2

Off-modern educational tools

Transformer-based large generative models developed using reinforcement learning and trained on huge datasets of human culture are weird tools that do not fit the premise of the stories being used to sell them. Technology companies are desperately shoving a weirdly shaped peg into a precision-drilled, circular hole. In so doing, they have created a speculative bubble that is both financial and existential. What happens when all the gas inside the bubble dissipates suddenly with a pop, or slowly, with sneaky little releases that redefine superintelligence as a gentle singularity?

Probably nothing good. So much depends on the social and political context and whether there is a flame present. But let’s indulge in some speculation of our own. Let’s imagine the result is to clear the air so that we can see the potential afforded by generative AI.3

This is why I find Svetlana Boym’s notion of the off-modern useful. Her manifestos help frame transformer-based, large AI models not as a modern information technology, but as an off-modern educational technology.

In Boym’s formulation:

Off-modern follows a non-linear conception of cultural evolution; it could follow spirals and zigzags, the movements of the chess knight and parallel lines that on occasion can intertwine asymptotically. Or as Vladimir Nabokov explained: “in the fourth dimension of art parallel lines might not meet, not because they cannot, but because they might have other things to do.” As we veer off the beaten track of dominant modern teleologies, we have to proceed laterally, not literally, and discover the missed opportunities and roads not taken. These lie buried in modern memory like the routes of public transportation in the American cities that embraced car culture a little too wholeheartedly. Off modern has a quality of improvisation, of a conjecture that doesn’t distort the facts but explores their echoes, residues, implications, shadows.

The potential for generative AI as an off-modern educational tool is similar to the potential of autonomous vehicles for urban redevelopment. Writing in The Guardian, Adam Tranter urges us to avoid the mistakes of the past as Waymo and other AV companies begin to expand.

Unlike human drivers, AVs thrive on strict rules, structured environments and predictable behaviour. The messiness of human movement is challenging and a threat to AV adoption. That’s why “jaywalkers” are flagged as an operational challenge, because autonomous systems can’t easily deal with real people doing ordinary things. The risk is that instead of adapting cars to people, we’ll yet again redesign streets to suit machines.

Silicon Valley expects that we will redesign educational institutions to suit their new machines. There is nothing new in that expectation, but the teaching machines of the past were all modern. They followed straight lines of optimization and efficiency in their operations. Attempts to implement them were driven by what Audrey Watters in Teaching Machines: The History of Personalized Learning calls “the teleology of ed tech”: Adoption is inevitable; resistance to making school “more ‘data-fied,’ more computerized, more automated” is futile. The failures of ed tech are always due to the human factor; the teachers just don’t get it.

The teleology of ed tech has framed efforts over the past two years to deploy generative AI in schools at all levels. The difference this time is not that the tools fail to fulfill their promise; it is that large AI models fail in ways that betray their nature. ChatGPT is off-modern.

A defining characteristic of large AI models is that they spiral or zigzag, introducing errors that no human would make. ChatGPT is a better Clippy, a better tutor, and a better Eliza, except when it isn’t. When it does something strange or unexpected, when it fails to be a properly modern assistant, tutor, or therapist, it teaches a lesson about living in the modern world.

Silicon Valley executives and AI enthusiasts insist that the simulated cultural outputs are equivalent to human thinking. Many people have invested exuberantly in that promise. As that promise deflates, the off-modern potential of this cultural technology will become more visible.

AI Log, LLC. ©2025 All rights reserved.

Svetlana Boym was a visual artist and cultural theorist. She died in 2015 while working on a writing project called The Off-Modern Condition. Some of her essays about the off-modern are available online at https://sites.harvard.edu/boym and were published in The Svetlana Boym Reader (Bloomsbury Academic, 2018).

See also Mollick’s Embracing weirdness: What it means to use AI as a (writing) tool and my review of Co-Intelligence.

Visual artists seem more attuned to the educational potential of generative AI. I mentioned Eryk Salvaggio. Celine Nguyen of personal canon is another example. Her good artists copy, ai artists ____, and this review essay about Chaim Gingold’s Building SimCity: How to Put the World in a Machine are wonderful explorations of the cultural and educational potential of generative AI.
